The Great Decoupling: Why the Future of Computing Is Screenless

Introduction

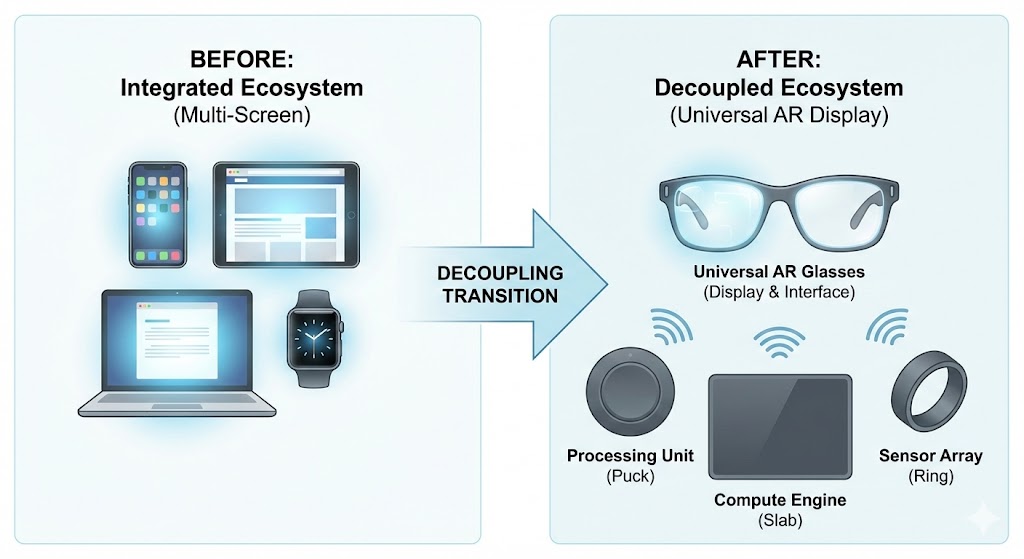

Today, user interaction is fragmented across a diverse ecosystem of integrated devices (smartphones, tablets, laptops, and smartwatches), each of which requires its own display panel, embedded processor, and power source.

However, as high-fidelity Augmented Reality (AR) and Virtual Reality (VR) display technologies approach maturity, as exemplified by the Apple Vision Pro and Xreal glasses, this multi-screen paradigm becomes increasingly hard to justify. Maintaining multiple physical screens adds unnecessary hardware bulk, wastes power, and fragments the user experience across form factors.

Current XR Approaches

Current approaches to XR integration generally fall into two imperfect categories:

- Standalone Headsets (e.g., Meta Quest 3): These place high-powered processors and batteries directly in the headset, behind the display. This offers untethered freedom but inevitably produces heavy form factors unsuitable for all-day wear.

- Tethered “Immersive Screens” (e.g., Xreal Air): These offload processing to an existing smartphone via cable. This reduces headset weight but relies on a host device that retains its own functional, battery-draining screen, failing to resolve the hardware redundancy issue.

The Great Decoupling

The logical evolution of this architecture is a fundamental “Great Decoupling” of display and computation. To achieve socially acceptable form factors such as standard eyewear, the display must become a pure output mechanism, physically separated from the processing unit. Consequently, the computational devices that survive in the ecosystem (laptops and mobile units) must evolve into screenless engines that dedicate their resources solely to driving the universal visual experience delivered by the headset.

This decoupled architecture requires a complete reimagining of the current hardware landscape. As Figure 1 illustrates, device categories defined by their screens face obsolescence.

Figure 1: The shift from integrated devices to a decoupled ecosystem. The tablet category, lacking a unique value proposition, is rendered obsolete. The surviving compute units (mobile puck, laptop slab) become screenless processors that feed data to the universal AR display. (Source: hypothetical diagram based on emerging trends.)

The Input Challenge

This shift also introduces a critical interface challenge. Mid-air “touch-free” gestures alone lack the haptic feedback necessary for precise, sustained interaction. The solution lies in a bifurcated approach to tactile input: legacy peripherals such as keyboards remain essential for stationary productivity.

For mobile contexts, the screenless compute unit itself must serve as a high-precision tactile controller. This enables minimalist thumb-based input that requires no visual attention, in contrast to imprecise air gestures (Figure 2). At the same time, on-device AI must provide contextual understanding of real-world objects, such as recognizing kitchen utensils or industrial machinery, so that procedural guidance can be overlaid directly onto the physical environment.

Figure 2: The Neo-Tangible Mobile Interface. Instead of unreliable air gestures, the screenless mobile compute unit functions as a physical tactile input device, allowing precise control without visual reliance on the controller itself.

Conclusion

The trajectory of personal computing is not toward packing more technology into a single handheld screen, but toward decoupling the display from the computer entirely. By allowing surrounding devices to “go dark” and become specialized, screenless processing engines (supplemented by cloud computing for latency-tolerant tasks), the digital world can seamlessly overlay the physical one through a single, universal visual interface.